Bringing Lens onto Google Images

Dec 2019 - Jan 2021

Context

Before I joined the Lens team, Lens existed only as an in-app experience in the Google and Photos apps. I led the Lens web team, which was tasked with bringing the Lens experience to different web surfaces, starting with Google Images (images.google.com, aka the “Images” tab that accompanies every Google search). This was a key milestone for Lens because it made the experience accessible to all users, regardless of device.

Designing this experience required defining a clear journey and value proposition for what Lens offered on top of what users were already using Image Search for.

Plant

Place

Celebrity

Making images actionable

In addition to identifying things, guiding users with shopping intent is an important journey to support in both Images and Lens. Results on Google Images are often visually useful but vary in how actionable they are. Through qualitative user studies, we found that many users react negatively to results that don’t offer a path to action, such as Pinterest images that don’t say where to buy the featured products. Designing for this user journey was therefore a priority for our team.

Understanding the broader journey

Before diving into the details of the product experience, it was important to take a step back and understand shopping intentions more broadly so that our team could be strategic in what we were going to focus on. What kind of shopping journeys were we planning to support? For those journeys, how do people typically shop, both in store and online? Doing so helped us to clarify the value Lens brought to Image Search in the shopping funnel.

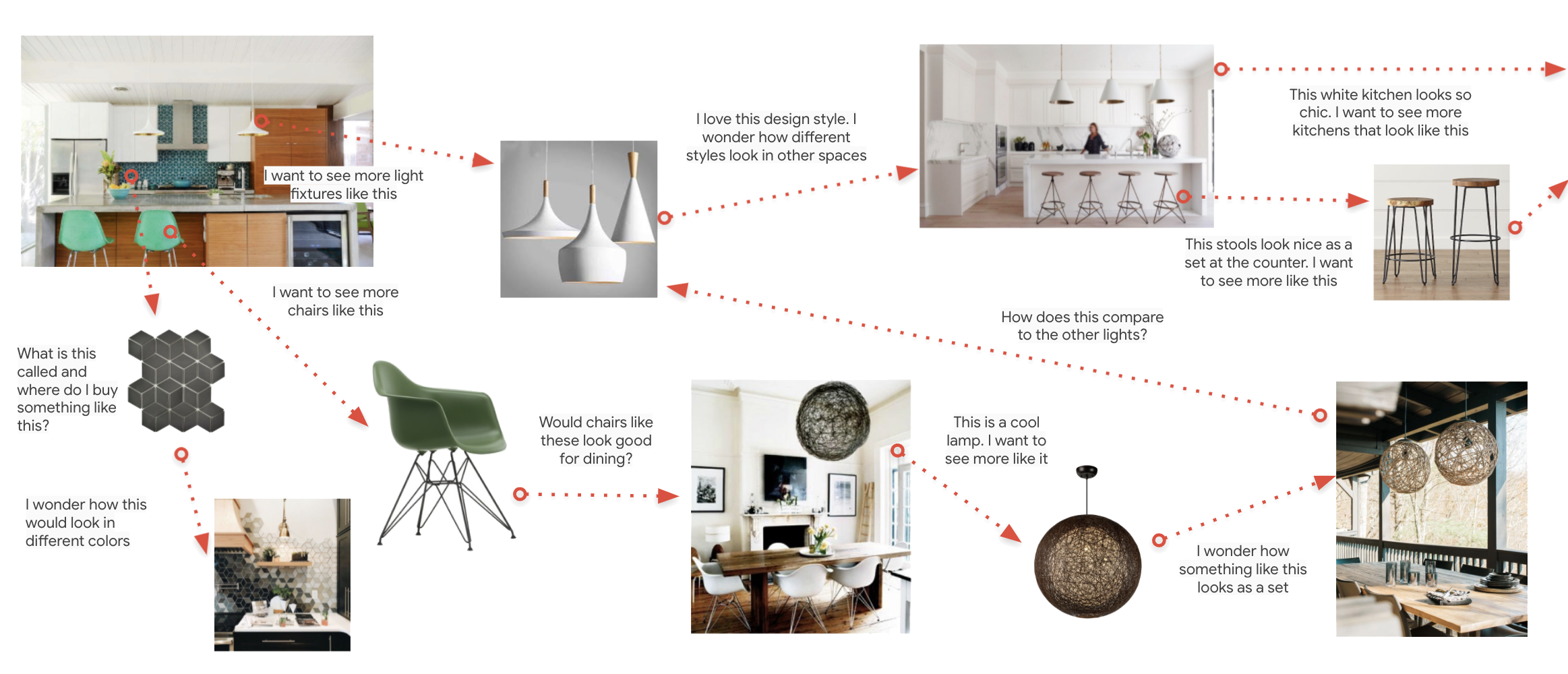

In doing so, it became clear that the existing design framework worked well for targeted, high-intent journeys but was less suited to discovery journeys. Thus, I led a discussion with my team about supporting more flexible shopping journeys: broadening results, stumbling into new inspiration, and following different browsing paths simultaneously. User research also found that transactions don’t typically happen immediately.

Visioning across teams

I ran a cross-functional design sprint across multiple teams (Image Search, Lens, Shopping) that brought stakeholders together in one place to ground our teams in foundational research, align on shared product goals, and brainstorm design directions together.

Based on these insights, I went on to brainstorm and design features that would let users browse and shop the way they already do today, a process that often involves friction across multiple surfaces.

With a majority of journeys on Google Images categorized as research or discovery-oriented, it became clear that Lens could make a big impact on inspirational imagery, especially of apparel and home decor. However, the Lens journey at the time was rather transactional, having originally been designed to help users answer quick questions in the wild. In other words, the way it was designed didn’t work well for shopping journeys.

Launched design

Defining the role of Lens on Google Images

It was important that Lens be integrated seamlessly into Google Images rather than feel like a separate experience. Thus it was crucial to identify exactly what value Lens offered on top of the existing Images surface. Lens helps users take actions on images that they can’t take in Images alone: for example, identifying something specific in the image that’s not already clear (like a plant pictured in an image of a living room). Thus Lens should always be accessible, but there should be no expectation that it will be useful in every Images journey.

Design interactions and user comprehension

When the user is in Lens, they can tap on any object or edit the selection to update the results dynamically. This was designed in tandem with the redesign of the interactions in the app experience. One key improvement was showing the selection as a bounding box rather than the blue dot used previously. This improved user comprehension of both what Lens is doing (interpreting your selection) and what the user can do (edit the bounding box).

Enabling action on any product

To evaluate or purchase an item, a user needs to tap a result to visit the merchant’s site. Thus it is important to support easy navigation across these surfaces, so that a user who is considering one option can return to evaluate others.

Features for later

As is often true in the product design cycle, not all of the features proposed made it into the first release of the design, but they did influence the roadmap and some are in the queue. Some of the features included:

Broaden, refine, repeat

When a user selects a product within an image, say a chair, Lens surfaces the best visual matches for that specific chair. This enables the user to continue refining their search to make a decision. But what if they’re early in their journey and still considering their entire living room collection? In that case, seeing inspirational images with similar chairs would be useful in helping the user imagine it in different spaces and discover other furniture.

Save for later

It is unlikely that purchases inspired by imagery, especially of more expensive items, would be made in one sitting. One way to address this is through a collections feature. I proposed surfacing a way for users to save results for later consideration, compare options easily, and make a purchase when they are ready.

Semantic relevance

Another way to improve the experience and surface more relevant results is to move past visual similarity and surface semantically relevant results. Is there data that connects products that may not look visually similar but share similar aesthetic qualities? For more on this, check out my work on shopping experiences in Lens.

Launch and impact

We launched the first version of this experience in January 2021 and tripled daily Lens traffic. Since then, I’ve extended the designs to desktop as well, which launched in June 2021. Stay tuned for updates! In the meantime, I invite you to visit Google Images on your mobile phone and give the experience a try!